Extension’s bias toward public value

Key to making the case for Extension funding is our ability to explain why Extension, and not some other public or nonprofit organization, should provide programming aimed at improving conditions in the state. In other words, we need to answer the “Why Extension?” question. When I ask Extension professionals to name Extension’s strengths relative to other possible program providers, the first response is usually that Extension provides sound, unbiased, research-based programs. Case closed, right?

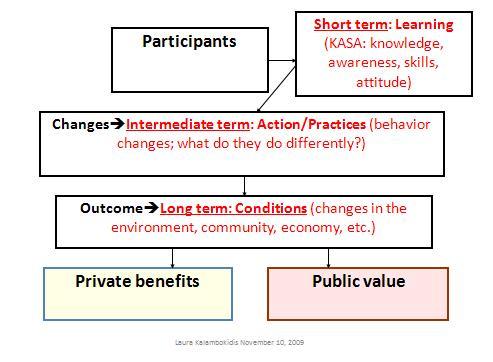

At a recent workshop for University of Wisconsin Extension’s Western Region, this question arose: Can we really say that Extension has no bias? We do not have a profit motive, like private sector service providers. And we do not have specific mandates, like many local government service providers. But can we say that our program content has no bias at all? Isn’t striving to improve conditions in the state a bias? Isn’t striving for public value a bias? Isn’t using scientific research as a base for programming a bias?

This discussion brought two things to the forefront for me. First, we need some language other than “unbiased” to describe Extension programming. “Motivated by the public good”? “Based on the best scientific knowledge”? “Designed to create public value”? I’m not yet sure what the answer is. Second, whatever descriptor we use, we need to ensure that Extension programming actually fits it. We need to be certain that we are doing whatever it is that separates Extension from other program providers.