Program evaluation

Private benefits from short-term outcomes

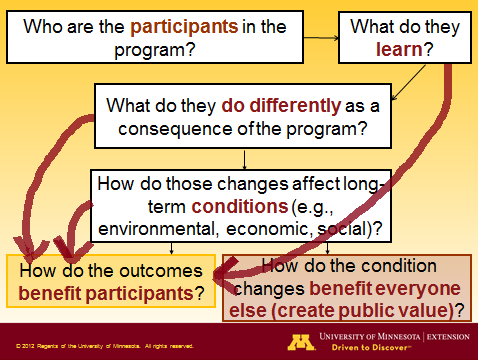

The students in my recent lecture for the Penn State University Agricultural Extension and Education program asked me several useful questions. One was whether the public value message structure (below) implied that private benefits can only be accrued after condition changes have occurred. Note that the thin, black arrow leading to the participant’s private benefits implies exactly this.

In fact, I think the original construction, with the arrow extending from condition changes to private benefits, is misleading. A program participant may very well enjoy benefits long before conditions have improved. Indeed, many participants benefit directly from improvements in knowledge and skills. For example, a participant in a leadership development program may see personal career benefits from the enhanced leadership and facilitation abilities he acquired through the program, and this may occur well before the program can claim improvements in community conditions. So I wonder if the diagram should be redrawn (as I did above) to indicate that those private benefits can, indeed, arise from the intermediate stages of public value creation. What do you think (besides that I need to hire someone with better graphic design skills than mine :))?

How long is the long-term?

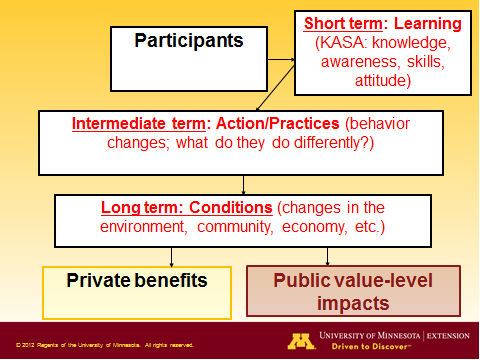

In the accompanying diagram, I map the outcomes section of the University of Wisconsin Extension (UWEX) logic model to the components of a public value message. In the parlance of the UWEX model, learning outcomes are short-term, behavior changes are medium-term, and condition changes are long-term.

Participants in two recent seminars, one for the UM Center for Integrative Leadership and one for Penn State University’s Agricultural Extension and Education program, challenged this pattern. They argued that some programs may generate public-value-level outcomes in less time than it takes other programs to generate behavior changes. In these cases, doesn’t labeling the outcomes as short-, medium-, and long-term cause confusion?

I think this is a useful point. What matters for the generation of public value is that the desired community-level condition changes are achieved, not how long it took to get there. If a program is able to alter economic, social, civic, or environmental conditions in ways that matter to critical stakeholders, then those impacts can be the basis of a public value message, even if they arose in the course of weeks or months, rather than the years or generations it may take for other programs to see impacts.

Does public value magnitude matter?

Is it enough for a stakeholder to learn that your program produced public value, or do stakeholders want to know how much value was created? Put another way, is it adequate to demonstrate that a program has a positive return on investment for a community? Or does it have to have a higher return than all the alternative investments the community could have made?

I was asked this question today at a Center for Integrative Leadership Research Forum, where I presented “How Cross Sector Leadership Builds Extension’s Public Value.” It seems that the answer has to be yes: it does matter whether a program generates a large or small amount of public benefit relative to its cost.

A potential funder wants to direct dollars toward their highest use. Ideally, all programs would report a return using a common metric. The metric could be dollars for programs whose impacts can be monetized (i.e., converted to dollars); it could be some other common metric (e.g., amount of pollution remediation, high school graduation rate) for programs addressing similar issues. With common metrics, a funder could rank programs by return on investment and fund as many of the top programs as they can.
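To make the ranking idea concrete, here is a minimal Python sketch of how a funder might rank programs by return on investment and fund down the list until the budget runs out. It assumes every program's impacts have already been monetized into dollars, and it defines return as net benefit per dollar of cost. The `Program` class, the `fund_by_roi` function, and the program names and figures are all hypothetical, invented for illustration rather than taken from any real funder's process.

```python
from dataclasses import dataclass


@dataclass
class Program:
    """A hypothetical funding request whose impacts are already monetized."""
    name: str
    cost: float     # dollars requested from the funder
    benefit: float  # estimated public benefit, in dollars

    @property
    def roi(self) -> float:
        # Net return per dollar invested: (benefit - cost) / cost
        return (self.benefit - self.cost) / self.cost


def fund_by_roi(programs: list[Program], budget: float) -> tuple[list[str], float]:
    """Rank programs by ROI and fund from the top until the budget runs out."""
    funded: list[str] = []
    remaining = budget
    for program in sorted(programs, key=lambda p: p.roi, reverse=True):
        if program.roi <= 0:
            break  # everything from here down costs more than it returns
        if program.cost <= remaining:
            funded.append(program.name)
            remaining -= program.cost
        # A program that doesn't fit is skipped; cheaper ones may still fit.
    return funded, remaining


# Hypothetical programs and figures, for illustration only
programs = [
    Program("leadership development", cost=50_000, benefit=120_000),
    Program("water quality", cost=80_000, benefit=160_000),
    Program("financial literacy", cost=30_000, benefit=45_000),
    Program("pilot workshop", cost=20_000, benefit=15_000),
]

funded, remaining = fund_by_roi(programs, budget=120_000)
print(funded, remaining)
```

This greedy version skips any program that no longer fits the remaining budget and keeps going down the list; a funder could just as reasonably stop at the first program it can't afford. Either way, the ranking is only meaningful if every program reports its return in the same metric.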

Such apples-to-apples comparisons must be rare, though, even for programs with common objectives. I also imagine that the magnitude of a program’s expected public value, if it is known, will inform, but not drive, a funder’s decision.

What has your experience been? Have you sought funding from a source that demands to know the expected return on their investment in terms of dollars or some other metric? Do you know of funders that use those metrics to rank programs by return?

Working Differently in Extension Podcast

Interested in a short introduction to “Building Extension’s Public Value”? Check out this Working Differently in Extension Podcast, featuring a conversation between Bob Bertsch of Agricultural Communication at North Dakota State University and me. If you’d like to actually see us converse, check out the video of the podcast below.

Evaluation and accountability

Last month at the 2013 AAEA Annual Conference, I was one of three presenters in a concurrent session on “Creating and Documenting Extension Programs with Public Value-Level Impacts.” I learned a lot from both of my co-presenters, but here are just two quick ideas I took away from the session:

From Jo Ann Warner of the Western Center for Risk Management Education: As a funder, the WCRME demands evaluation plans–and ultimately results–from grant applicants. But the Center itself is accountable to its own funders, who demand that the Center demonstrate the effectiveness of the programs they have funded. So generally, grant applicants should keep in mind to whom the granting agency is accountable and provide the kind of results that will help the agency demonstrate effectiveness.

From Kynda Curtis of Utah State University: Intimidated about evaluating the effectiveness of your extension program? Don’t be! It’s not as hard as you think it is, won’t take as much additional time as you think it will, and costs less than you expect. No excuses!

Critical conversation about public value

The participants make a number of interesting points about where Extension program evaluation has been and is headed. Several of my own take-aways from the video influenced my recent presentations at the 2013 AAEA Annual Conference and the APLU meeting on Measuring Excellence in Extension. I’ve paraphrased three of those insights below.

Nancy Franz: Extension should work toward changing the unit of program evaluation analysis from the individual to the system.

Mary Arnold: Extension is still too often limiting evaluation to private value and isolated efforts. We need to move closer to systems-level evaluation.

Sarah Baughman: We need to do more program evaluation for program improvement, not only evaluation for accountability.

Mark Moore: Recognizing Public Value

My UM Extension colleague Neil Linscheid has alerted me to this recording of Harvard Kennedy School’s Mark Moore discussing his new book, Recognizing Public Value. I haven’t read the book yet, but I’ve ordered it from the library, and I’ll write more once I’ve read it. However, I can share some quick impressions from having listened to the recording of Moore’s talk.

Both Moore and his discussant, Tiziana Dearing of the Boston College School of Social Work, commented on the limitations of monetizing public value created by service providers. Starting around 28:00 of the recording, Moore explains that to measure the value created by government, we are often challenged with “describing material conditions that are hard to monetize….We don’t know how to convert them into some kind of cash value.” He doesn’t give examples, but it’s easy to imagine changes in civic or social conditions as falling into this category. Moore then decries the amount of effort that goes into trying to monetize these effects and says that effort “mostly distorts the information rather than clarifies.” For clarity, he would prefer a concrete description of the effect (presumably something like units of pollutant reduction or increase in graduation rates) to “somebody’s elaborate method to try monetize it.”

Dearing shares Moore’s skepticism of monetizing government and nonprofit benefits, and around 42:00 of the recording says, “It’s a very dangerous thing outside of the private sector to have the same enthusiasm for the bottom line.” Later she warns that “it’s so easy to follow the metrics that follow the dollar that it becomes shorthand for a theory of change.”

In this post, I also urged caution about monetizing the impacts of Extension programs. Nevertheless, when they are carefully framed and rigorously executed, dollar-denominated metrics such as cost-benefit analyses allow for comparison across programs. I wonder how Moore and Dearing would advise a local government that is choosing between anti-pollution and education investments when the only impact data it has is a “concrete description of the effects.” How would they balance units of pollutant mitigation against a percentage change in the graduation rate?

Have you read Moore’s new book or at least listened to his talk? What do you think about what Dearing calls our “enthusiasm for the bottom line”? Do we go too far in trying to translate program impacts into dollars? What other contributions of the new book are particularly relevant for Extension?

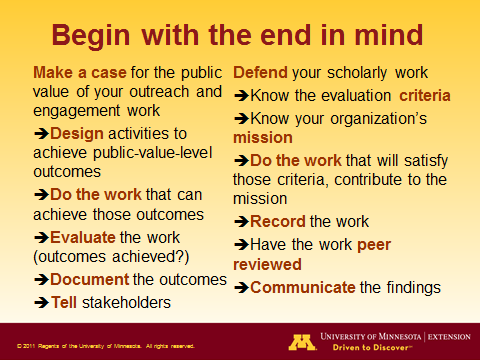

Can public value help you get promoted?

The guidance I came up with is pretty similar to what I wrote about here: begin with the end in mind. Basically, a scholar who aims early on for public value-level impacts and outcomes, and then evaluates and documents those outcomes, will have built a strong case for her work’s public value. Because each step in creating public value involves scholarship–of research, engagement, teaching, and evaluation–the scholar who meticulously documents her contributions should, in the end, be well-positioned to defend her record.

I know that, with all of the demands on outreach and engagement faculty, this is easier said than done. I know clients and community members expect these faculty to engage in activities that are hard to classify as scholarship. But I do think that leading with the end game can help a faculty member prioritize for success.

I also wrote in this blog entry about ways that the public value approach can help close the loop between research and engagement. The research-design-engagement-evaluation loop illustrated in that entry provides a number of opportunities for a scholar focusing on engagement work (in contrast with outreach education) to document her contributions and impact. How did you contribute to (1) the research that underpins the program curriculum, (2) the program design, (3) the engagement itself, and (4) the program evaluation? It seems to me that viewing your engagement program in the context of the loop can bring to mind scholarly contributions that you might not have thought to document. Perhaps it can even lead to a more complete promotion and tenure case.

National resources for impacts and public value

First, I think it was NIFA director Dr. Sonny Ramaswamy who mentioned an effort this summer to develop a national portal for impact reporting that will consolidate Extension program impact results.

Second, I believe it was ECOP chair Dr. Daryl Buchholz who highlighted ECOP’s Measuring Excellence initiative. It appears that this project is meant to define and demonstrate excellence and to report impacts for Cooperative Extension as a whole. From the website: “Cooperative Extension has advanced from merely reporting inputs and outputs to documenting outcomes and impacts of its programs. However, most of these measures are tied to specific programs. They are not generally assessed or considered at the organizational level.” While the “excellence” part of the website is well-developed, the pages having to do with impact are still under construction. I look forward to the work that will populate these pages with resources and guidance for Extension impact teams. Meanwhile, I have to give a shout-out for the public value statements on the front page of the website!

By the way, my notes from the PILD keynote talks are a little sparse. If I am wrong about which speaker spoke about which initiative, please correct me in the comments. And if you know more about how the portal or the Measuring Excellence project can strengthen Extension’s public value case, please share that, too!

(Photo credit: USDAgov on Flickr)